
Since we launched our switchable models capability a few months ago, customers have flocked to the new feature. Tabnine’s users have praised the ability to use any LLM they choose within a generative AI tool purpose-built to speed up software development. Individuals and small teams can switch on the fly between a variety of Tabnine-developed and third-party LLMs, choosing the model that best meets the requirements of any given project.

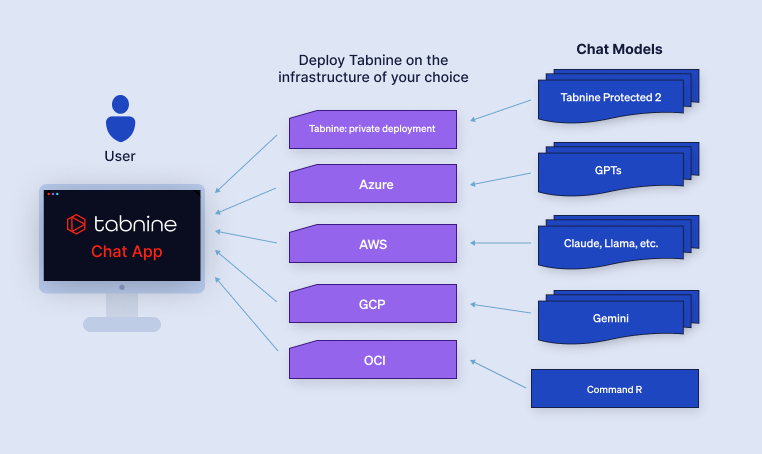

But how does it work if you deploy Tabnine within your own private (or even air-gapped) environment? How does an administrator control which models are available to their teams? What if you want to connect to model endpoints you purchase directly from the LLM providers?

In this blog post, we’ll cover how customers that self-deploy Tabnine can gain the flexibility of switchable models without sacrificing privacy and control. We’ll show how administrators can select which models are available to their teams and how they can connect Tabnine to their own private LLM instance(s).

Historically, when an engineering team selected an AI software development tool, they got both the application (the AI code assistant) and the single underlying model it runs on (e.g., GitLab’s use of Google’s Vertex Codey, Copilot’s use of OpenAI). But many engineering teams have expectations or requirements that no single model was built to meet. If they want to take advantage of an innovation from an LLM other than the one that powers their current AI code assistant, or if they want different models to support different use cases, they’re stuck.

That all changes with Tabnine’s switchable models.

With switchable models, you select the LLM that underpins the chat interface for our AI-powered software development tools. Tabnine is built to make the most of any LLM it connects to, giving engineering teams the best of both worlds: the rich understanding of programming languages and software development methods built into the LLMs, along with the AI agents and developer experience Tabnine has developed to exploit that understanding and fit neatly into development workflows.

Tabnine leverages these LLMs to power our full suite of AI capabilities and agents, including code generation, code explanations, documentation generation, and automatically generated tests. In addition, Tabnine uses enterprise-specific context to offer highly personalized recommendations, drawing on the code and information accessible from each developer’s IDE as well as awareness of the team’s full codebase(s) and critical noncode information.

Tabnine’s switchable models capability allows enterprises to take advantage of the innovations that come from a variety of purpose-built and powerful LLMs without compromising their control over the deployment.

Tabnine customers can select the right model for a specific project or use case, or to meet the requirements of a specific team. Most importantly, Tabnine administrators have absolute control over which models are made available to their teams and how they connect to each of those models (via private deployment of the model, dedicated model endpoints, or general API access).

Tabnine administrators have the freedom to choose the LLM(s) that are used to power our AI chat. They can select from multiple Tabnine-developed models as well as popular models from third parties, including OpenAI’s GPT-4o, Anthropic’s Claude 3.5 Sonnet, Cohere’s Command R, and Mistral’s Codestral.

Administrators get complete control over how they connect to these models.

In Tabnine’s SaaS environment, admins consume third-party models via each model’s API. Once an admin accepts the terms of use for the desired models, those models become available to the organization, and admins can easily enable (or disable) them by project or use case.

You can also connect to your own private model endpoints. Administrators can connect Tabnine to their company’s existing private LLM instance(s) and make those models available to their engineering teams. Connect using your authentication key and the private endpoint’s location, and the model will appear in the list of LLMs available for our AI chat.

Tabnine supports all major cloud providers. You can deploy Tabnine in your AWS VPC and connect it privately to models like Anthropic’s Claude 3.5 Sonnet, or connect to Cohere’s Command R running alongside Tabnine on your OCI instance. You have full control when connecting to private LLMs.

If you’re an existing Tabnine customer, contact our Support team for help choosing and connecting to the right models for your team today. Not yet a customer? Give us a chance to demonstrate how Tabnine delivers better results by providing the AI software development platform built around you.